God From The Machine

Many an hour of my adolescence was spent sitting at a screen, basking in its fluorescent glow, as I jumped, fought, stole, climbed, or flew my way to victory in one video game or another. But looking back on my early teenage years, there was one website, not a video game, that never failed to amuse in any social setting: Cleverbot. Cleverbot, whose latest iteration launched sometime around 2006 or 2007, is a program that uses machine learning to have “conversations” with humans. I put conversation in quotes because, well…

The program has just enough of a grasp on grammar to feign conversation, though not particularly well. Playing around with it now, several years later, I’m surprised by its sophistication, but it certainly wouldn’t pass any serious formulation of the Turing test. Instead of the uncanny valley, it lands in something more like the trivial-and-amusing valley. I look back with a smile on gathering around a clunky, ’90s-era monitor with friends, trying to come up with the funniest and most outlandish input possible. Usually these took the form of the most vicious and crude insults we could muster. Cleverbot would usually play up some vague “hurt feelings,” or, more often than not, respond with a complete non sequitur… although the best responses came in those rare instances when Cleverbot insulted you back.

After my middle school years, I didn’t think about Cleverbot much. That is, until November of last year, when OpenAI unleashed ChatGPT on the world. Built on the organization’s GPT-3.5 language model, ChatGPT offers a conversational interface in much the same way Cleverbot did, but this program is no joke. Where Cleverbot trips over its own feet and often misinterprets input, ChatGPT can write coherent essays, offer realistic recipes, explain advanced concepts across many disciplines, write and explain code, and much more. I just asked ChatGPT what the best programming language is (software engineering is my day job), and I got an intelligent, well-reasoned answer:

“It is difficult to say which programming language is the "best" as it depends on the specific use case and the individual preferences of the programmer. Different programming languages excel in different areas, such as performance, readability, and expressiveness.

For example, C and C++ are often used for low-level systems programming and high-performance applications because they provide fine-grained control over the underlying hardware. Java and C# are commonly used for enterprise-level software development because of their built-in support for security and scalability. Python is often used for data science, machine learning, and scientific computing because of its clear and expressive syntax, and its large ecosystem of scientific libraries…” - ChatGPT

And that isn’t even the entire answer! On the other hand, when given the exact same question, Cleverbot… uh, fails to understand the assignment.

“You don’t teach english for me.” - Cleverbot

Okay, so ChatGPT is a pretty good conversational program. Does that actually have practical consequences? Predicting the future is always a risky business, but I’d be hard pressed to argue that ChatGPT is insignificant. It’s just passed an MBA final from UPenn and the US Medical Licensing Exam, and it is well on its way to passing the Illinois Bar. Automation has been eating away at the blue-collar sector for a while, but these recent developments suggest that more procedural white-collar work will probably be next, and they have received a fair amount of media and societal attention as a result. And justifiably so. To ask a more direct question, though: should we be concerned? For a long time I was basically in the “no” camp, but I’m increasingly moving toward “yes.” How and why have I switched camps? To answer that, I’d like to expand the discussion to include one of the best sci-fi movies of the last decade, Ex Machina.¹

“Science fiction… is the fiction of revolutions. Revolutions in time, space, medicine, travel, and thought… above all, science fiction is the fiction of warm-blooded human men and women sometimes elevated and sometimes crushed by their machines.” - Isaac Asimov

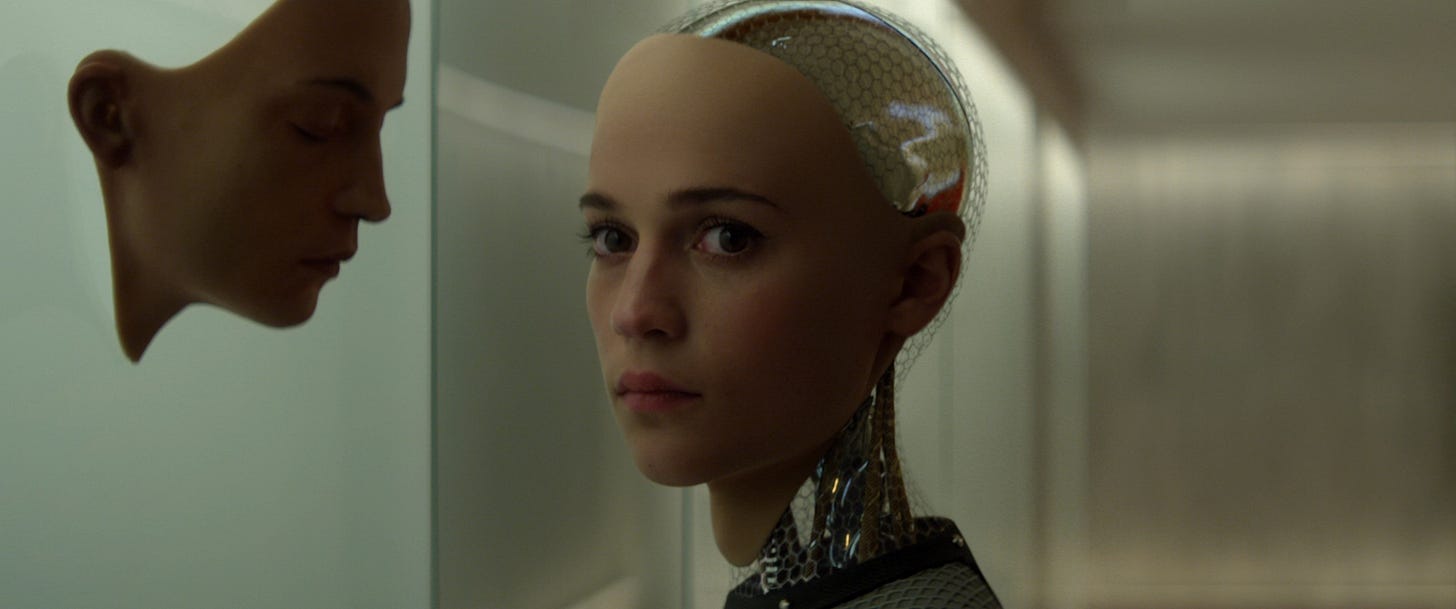

Ex Machina is, at its core, a pretty straightforward movie. There are really only three characters, and the setup is this: Caleb, a programmer at a large internet company, wins a contest to spend a week at the compound of the company’s reclusive CEO, Nathan. On arrival, he’s informed he’ll be the human component of a Turing test. The subject? Ava. (Spoilers for the movie follow.)

Domhnall Gleeson and Oscar Isaac give great performances here, the former as the nervous, slightly awkward programmer caught in a situation he’s not quite sure how to navigate, and the latter as the brilliant but cocky, alcoholic executive. But it’s Alicia Vikander who steals the show, knocking the role out of the park with a performance that masterfully balances Ava’s mechanical nature and her femininity.

Back to the story, though. Although Caleb is initially amazed by Ava, he quickly realizes he’s running into a thorny problem in AI research. Consider this dialogue, taken from two early scenes in the movie and lightly edited:

Caleb: Oh man, she’s fascinating. When you talk to her, you’re just… through the looking glass.

…

Caleb: [Asks a highly technical question about Ava]

Nathan: Caleb? … I understand you want me to explain how Ava works. But I’m sorry, I’m not gonna be able to do that… It’s not ‘cause I think you’re too dumb. It’s ‘cause I want to have a beer and a conversation with you, not a seminar… Just, answer me this. How do you feel about her? Nothing analytical. Just, how do you feel?

Caleb: I feel… that she’s fucking amazing.

Nathan: Dude. Cheers.

[Clink bottles]

(The next morning)

Caleb: It feels like testing Ava through conversation is kind of a closed loop… Like testing a chess computer by only playing chess.

Nathan: How else do you test a chess computer?

Caleb: Well, it depends. You can play it, to find out if it makes good moves, but that won’t tell you if it knows that it’s playing chess. And it won’t tell you if it knows what chess is.

Nathan: Uh-huh, so it’s simulation versus actual.

Caleb: Yes, yeah. And I think being able to differentiate between those two is the Turing test you want me to perform.

Even if you could simulate consciousness, how would you know whether a program, AI, or robot truly experiences anything? It’s a simulation-versus-actual problem, as Nathan says. But Nathan continues:

Nathan: Look, do me a favor, lay off the textbook approach. I just want simple answers to simple questions. Yesterday I asked you how you felt about her, and you gave me a great answer. Now the question is, how does she feel about you?

These few sentences alone get at two important points: how the human feels about the AI, yes, but also how the AI feels about the human. Dude.

The evidence that Ava is truly sentient mounts as the movie continues. She makes a joke in her second session with Caleb and flirts with him in their third. The conversation between Nathan and Caleb after this third session, about AI and sexuality, is particularly well written and acted. I don’t want to spoil too much of the movie, but in the film’s final act, as Ava manipulates her way out of the compound, she walks up the final staircase and, with no one around, turns and smiles in what comes across as a genuine expression of experiencing freedom for the first time.

In a roundabout way, this movie explains why I wasn’t all that worried about AI in the past. Let’s zoom out for a second and discuss two important concepts that will help me explain what I mean. Artificial intelligence can be broadly categorized into artificial narrow intelligence and artificial general intelligence (hereafter ANI and AGI). While modern incarnations like ChatGPT are quite impressive, every example of AI we have today fits neatly into the narrow category. Whether it’s the hypothetical chess program Caleb mentioned earlier, ChatGPT, or the insidious TikTok algorithm, today’s AI is basically statistics on steroids: a mathematical model that doesn’t so much learn as optimize for a particular outcome given large quantities of data, and that gets updated as new data comes in. The chess computer doesn’t understand what chess is, or even that it’s playing a game; it’s simply a mathematical model that spits out moves without ever understanding what a move is.

Ava, on the other hand, is ostensibly an example of artificial general intelligence. As I was typing this, I stopped dead in my tracks: how do you precisely define AGI, or, more generally, intelligence itself?

intelligence - (noun) the ability to learn or understand or to deal with new or trying situations, or the ability to apply knowledge to manipulate one's environment or to think abstractly as measured by objective criteria (such as tests) - Merriam-Webster dictionary

This definition actually captures the distinction between ANI and AGI quite well. An advanced chess ANI will beat you every time, but it won’t be able to, say, play the violin; for that you’d need a separate program written specifically for that purpose. Where an ANI is narrowly focused on one specific thing, hence its name, an AGI would be multi-purpose and unbounded, capable of learning in a much more human-like sense than an ANI. (I’m being a bit loose with the word “learning,” I know, but since AGI doesn’t exist, we don’t know how it would operate, only that it wouldn’t be bound to a specific skill set.)

So why did Ex Machina initially ease any fears I had around AI? Because, in my opinion, we’re nowhere close to actually creating AGI. There’s certainly room for debate here, but given that we don’t yet understand how consciousness works on a technical level, we’re likely nowhere close to emulating it. ANI is narrowly focused and bounded, and would be much more amenable to human control than AGI, I’d think to myself. Therefore, we don’t need to worry, because AGI is far off, if it’s something we can create at all.

But the arrival of ChatGPT forced me to reexamine my previous conclusions. If you’re unfamiliar with AI theory, you may be wondering whether ChatGPT could be considered AGI. I hate to disappoint, but ChatGPT is still decidedly ANI. As I mentioned earlier, the defining feature of an ANI, compared to an AGI, is a singular, narrow focus outside of which it can’t perform. ChatGPT’s focus is, more or less, simply to have human-esque, text-based conversations. So why is this an issue? My belief that AGI is far off is what kept me somewhat indifferent. And even though ChatGPT is clearly an ANI, its sophistication was pretty shocking, even to me. ChatGPT runs on OpenAI’s GPT-3.5 language model. So what happens when version 4.0 is released? Version 5.0? Version 20, 30, 100? What about the versions after that? My point is that this isn’t a difference of kind; it’s a difference of degree. The core conundrum is this: at the end of the day, what does it matter if AGI is unattainable, if ANI begins to approach an AGI-like level of sophistication?

***

That’ll have to conclude my thoughts for today. There are other themes I might explore later, like the seemingly politically motivated bounds that have been put on ChatGPT’s output, why this is a problem, the workarounds people have come up with (looking at you, DAN), or the fact that ChatGPT can and will lie to its users. But I’m interested to see what the conversation looks like, either in the comment section here or over on Deimos Station, and how ChatGPT continues to evolve in the coming months. Subscribe if you liked what you read, and I hope to catch you next time. Cheers!

Further Reading

The AI Revolution - The Road to Superintelligence - Tim Urban writes my favorite blog that’s not on Substack. His 10-part series on society and politics is truly top-tier stuff, but here I’ve linked part 1 of 2 of his musings on AI, which is 8 years old but still conceptually right on.

¹ Deus ex machina is Latin and roughly translates to “god from the machine,” hence the title of the essay.
